Group 15

Group members holding their first prototype of the bow frame, used for weight and size testing

A HIT: Archery Haptic Interface for Training

Project team member(s): Kat Li, Mabel Jiang, Bolin Huang, Gadi Sznaier Camps

A HIT is a true-to-life haptic bow with a graphical interface for archery training. Archery is a fun, challenging sport that requires dedicated open space that not many people have easy access to - A HIT *aims* to make archery practice more accessible and portable by providing users with a bow that has a realistic draw and a fun interface that helps them develop an intuition for how the bowstring draw length affects arrow trajectory. The bow contains two motors attached to the bowstring that allow for many types of haptic rendering. In our current implementation, the motors apply increasing force to the bowstring as the user draws the bow further back, mimicking a real bow. Our Processing display then calculates the trajectory of the arrow for the current draw length and displays the bow, arrow, arrow trajectory, and target to the user. Combined, the bow and display allow the user to fire a virtual arrow in a fun, intuitive way and practice archery without needing to drive to a range.


Introduction

Archery requires considerable skill to hit a target accurately at varied distances. Besides creating safety risks for people farther downrange of the target, this can discourage participants who are new to the sport before they become proficient enough to hit a target. In addition, archery requires large open spaces, and going to an archery range can be challenging for people with disabilities. Therefore, for this project we propose an archery training haptic device that can be used to practice both aiming the bow at a target and pulling the bowstring back to an appropriate draw length for the desired target distance.

Background

Using haptic devices to help train new archers is a well-explored topic in the literature. A common approach is to use a physical bow as a physical and visual aid. The bow is modified to contain sensors that gather positional information, which in turn drives the haptic interface that the user interacts with.

One approach creates a haptic-integrated VR experience by using the weight, shape, and force characterization of various real traditional bows to recreate the feeling of drawing a bow when the user uses the haptic device. To provide the desired feeling, a wire is threaded through the arrow guide of the bow and is used to deliver the kinesthetic feedback of drawing the bow. The amount of force applied to the user is determined by how far the user has pulled the bowstring back, as recorded by a single electric motor with a rotary encoder attached to the bottom of the bow. Although this approach is effective at giving the feeling of drawing a bow, it makes for a poor teaching aid because it does not provide any information about posture or how far the user should pull back to hit the desired target. In addition, most of the weight of the bow is at the bottom, which makes the bow unbalanced and in turn makes the user more likely to learn to aim the bow too low [1].

Another approach uses a low-cost tracking system to capture the user's motion as they go through the process of firing the haptic bow and then correct any mistakes found in their posture. To enhance the user's experience, the authors tracked the user's viewpoint to provide 3D spatial cues that increase the feeling of being outside, and used advanced audio-visual representation of scene objects to enhance the hedonic qualities. Hints on how to correctly handle the bow and optimize attempts are provided based on the tracked data. Although this design has only been tested as a prototype and evaluated with a small number of participants, analysis has shown that motion-captured animation and viewpoint tracking provided a believable experience for the users [2]. One issue with this approach, however, is that it requires the user to wear tracking markers and use a large fixed tracking system to obtain the necessary position information. This makes it largely unsuitable for use outside specialized training facilities.

Another related work implements a wearable multi-dimensional skin stretch display on the forearm for immersive VR haptic feedback. One drawback of such a design is that a heavy device can restrict the user's movement, put pressure on the skin, and make the skin hard to stretch; the researchers resolved this with a counter-stretching mechanism that avoids grounding the display to the forearm with a large frame. The device is mainly composed of several stretch modules, through which feedback is delivered to the arm. With this device, users experience feedback not only while actively pulling the bow, but also while the bow is held stationary under tension. When the bow is pulled, the stretch modules on the forearm contract in proportion to the distance the arrow is pulled to provide stretch feedback [3].

Similar wearable devices for motion capture and force feedback have been explored, and an inexpensive and lightweight mechanical exoskeleton has been achieved [4]. It is composed of a controller, force feedback units, joints, and finger caps. This device uses passive force feedback and integrates all drivers in a single device, which significantly reduces its size, weight, and manufacturing cost. The rigid exoskeleton reproduces the situation in which a person's hand touches a real object and is prevented from moving forward, by exerting an opposing force on the user's fingertips as force feedback.

Methods

Hardware Design and Implementation

Bowstring Mechanism:

The bowstring mechanism uses a capstan drive and pulley to extend and retract the bowstring, with one mechanism located at the top of the bow and one at the bottom. The dimensions and range of motion of the capstan pulley and bowstring pulley were determined from the desired draw length, with the constraint that the capstan rotation stay below 360 degrees.

- Motor from hapkit

- Hapkit board with MR sensor for motor position

- Capstan + bowstring pulley (custom 3D printed)

- Pulley shaft (same as hapkit)

- Motor pulley (same as hapkit)

- Mounting bracket (custom 3D printed)

- Capstan cable

- Bowstring cable

- Various screws and spacers for mounting
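The pulley sizing constraint described above can be illustrated with a short calculation. This is a minimal sketch, assuming the string paid out by each pulley equals arc length (radius times rotation angle); the function names and the example pay-out of 12 inches per pulley are hypothetical, not the team's actual dimensions.

```python
import math


def min_pulley_radius(string_payout_in, max_rotation_deg):
    """Smallest bowstring-pulley radius (inches) that pays out the given
    string length without exceeding the allowed rotation."""
    if not 0 < max_rotation_deg < 360:
        raise ValueError("rotation must stay between 0 and 360 degrees")
    # Arc length = radius * angle (radians), so radius = length / angle.
    return string_payout_in / math.radians(max_rotation_deg)


def pulley_rotation_deg(string_payout_in, pulley_radius_in):
    """Rotation (degrees) needed to pay out a string length at a given radius."""
    return math.degrees(string_payout_in / pulley_radius_in)


# Hypothetical example: 12 in of string per pulley within 240 degrees of rotation.
radius = min_pulley_radius(12.0, 240.0)
print(f"minimum pulley radius: {radius:.2f} in")
```

A larger radius lowers the rotation needed for the same pay-out, at the cost of a bigger and heavier pulley.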

Bow Body:

Originally, the bow body was designed such that the height of the bow would be 62" to meet the bow length requirement for 25" draw length. However, when this first iteration was prototyped, the bow was far too heavy for a user to lift. The second and final iteration of the bow body shortened the bow height to 50" and shrunk its width to 3" in order to strike a balance between realistic bow size and overall weight.

The bow was laser cut from 1/4" MDF board with two layers connected by spacers along the upper and lower limbs of the bow for added stiffness. The handle was constructed out of 3 layers of board sandwiched together. The bow body is attached to the bowstring mechanism using spacers and screws.

We also added a counterweight to help balance the bow, because the weight of the bowstring mechanism generates a torque about the handle that is uncomfortable for the user.

System Analysis and Control

Haptic Rendering:

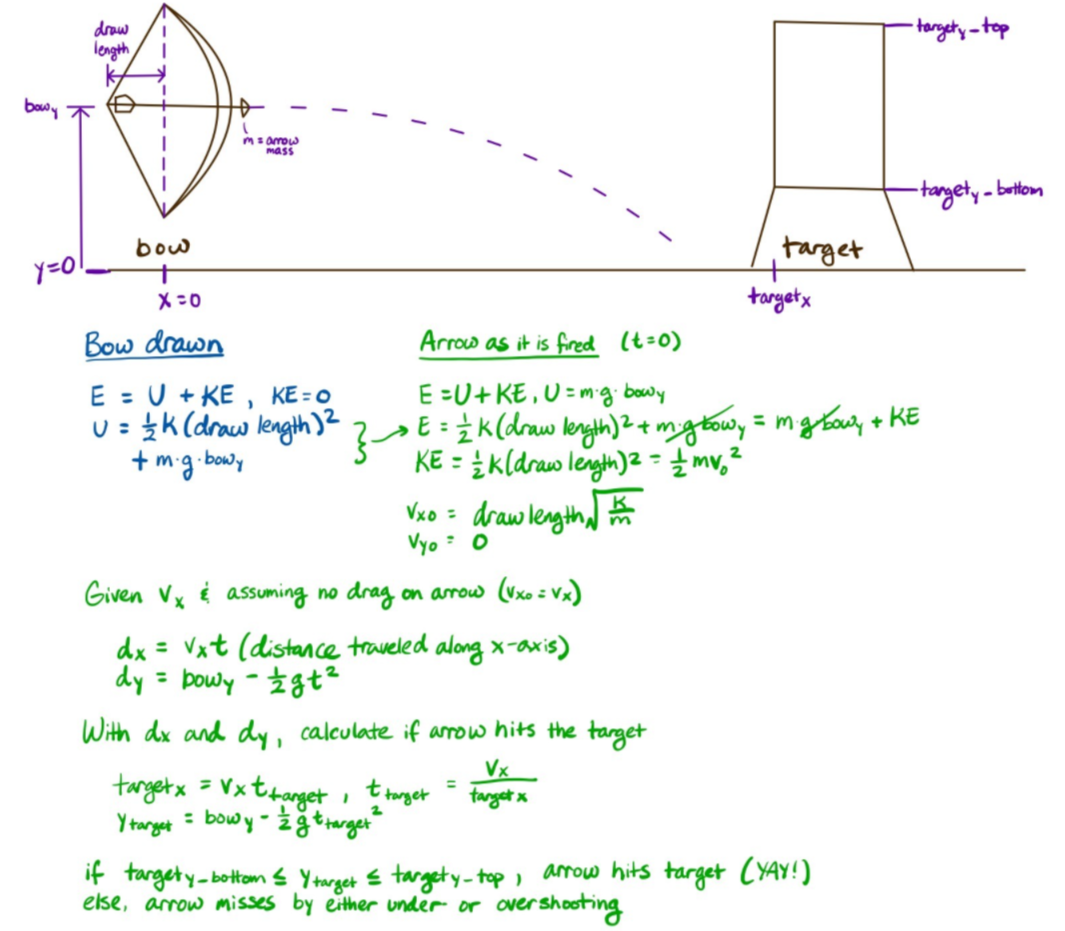

A virtual spring is applied to the bowstring which requires more force from the user as the string is pulled further back. This virtual model also returns the bowstring to the retracted, undrawn position when released.
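One way to write such a virtual spring is sketched below. The symbols are assumptions rather than the team's notation: $x$ is the draw displacement from the undrawn position, $k$ is the virtual stiffness, and $F$ is the force pulling the string back toward the undrawn position.

$$
F(x) =
\begin{cases}
k\,x, & x > 0 \\
0, & x \le 0
\end{cases}
$$

Because the force always points toward $x = 0$, releasing the string lets the motors wind it back to the retracted position.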

Arrow Trajectory Planning:

The arrow is modeled as a point mass given an initial velocity along the x-axis upon release from the potential energy stored in the bow. From this initial velocity, the arrow flight trajectory is calculated as follows:
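A minimal sketch of such a calculation is given here, assuming a drag-free point mass released horizontally with speed `v0` from a height `release_height_m`. The function names, parameter names, and example numbers are hypothetical and are not taken from the team's Processing code.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def height_at(distance_m, v0, release_height_m):
    """Arrow height (m) after travelling distance_m horizontally."""
    if v0 <= 0:
        raise ValueError("initial speed must be positive")
    t = distance_m / v0  # horizontal speed is constant without drag
    return release_height_m - 0.5 * G * t * t


def trajectory(v0, release_height_m, steps=20):
    """Sample (x, y) points from release until the arrow reaches the ground."""
    t_land = math.sqrt(2.0 * release_height_m / G)
    points = []
    for i in range(steps + 1):
        t = t_land * i / steps
        points.append((v0 * t, release_height_m - 0.5 * G * t * t))
    return points


# Hypothetical example: 50 m/s release speed from 1.5 m, target 20 m away.
print(f"height at target: {height_at(20.0, 50.0, 1.5):.3f} m")
```

Comparing `height_at` at the target distance with the target's vertical extent gives a simple hit test.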

Demonstration / Application

The display, which is modeled in Processing, consists of:

- The bow with bowstring

- The virtual arrow

- The arrow trajectory

- The target

- A countdown timer

Processing continuously reads the draw length of the bow from the hapkit (calculated during the haptic rendering step). The code calculates the arrow trajectory from this value and as the user changes the draw length, the display updates the bowstring, arrow, and trajectory drawings in real time.

Once the user has held the bowstring still at a draw position for 3 seconds, the arrow is fired automatically. The arrow flight is then displayed in place of the predicted trajectory, which lets the user see whether or not they hit the target. To ensure that the arrow only fires when the user is ready, the arrow is prevented from firing when the draw length is zero, and the firing timer resets if the user changes the draw length of the bowstring by more than a set threshold. Once the arrow is fired, the virtual arrow is reloaded and the process repeats.
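The firing logic above can be sketched as a small hold timer. This is an illustration only: the class name, the `update` interface, and the threshold value of 0.5 (in whatever unit the draw length uses) are assumptions, and only the 3-second hold time comes from the description above.

```python
class AutoFire:
    """Fires once the draw length has been held steady for hold_s seconds."""

    def __init__(self, hold_s=3.0, threshold=0.5):
        self.hold_s = hold_s
        self.threshold = threshold  # hypothetical allowed drift in draw length
        self.anchor = None          # draw length when the timer started
        self.start_t = None         # time when the timer started

    def _reset(self):
        self.anchor = None
        self.start_t = None

    def update(self, draw, now):
        """Feed the latest draw length and timestamp; True means fire now."""
        if draw <= 0:
            # Never fire an undrawn bow.
            self._reset()
            return False
        if self.anchor is None or abs(draw - self.anchor) > self.threshold:
            # First sample, or the user readjusted: restart the timer here.
            self.anchor = draw
            self.start_t = now
            return False
        if now - self.start_t >= self.hold_s:
            # Held long enough: fire, then reload for the next shot.
            self._reset()
            return True
        return False


timer = AutoFire()
fired = [timer.update(d, t) for d, t in [(10.0, 0.0), (10.2, 1.0), (10.1, 3.0)]]
print(fired)
```

Anchoring the timer to the draw length at which it started, rather than to the previous sample, keeps slow drift from holding the timer open indefinitely.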

Results

Bow Realism

The physical bow has very similar dimensions to a real traditional bow. With the haptic rendering algorithm as is, the bow draw feels very realistic and smooth with a discernable increase in the force required to pull the bowstring along the entire draw length. However, the bow itself is quite heavy given the material limitations for the bowstring mechanism, and as a result, the bow is difficult to hold up for long periods of time. Additionally, the bowstring does not fully return to the neutral, retracted position when the string is released due to the high friction and inertia present in the capstan drive.

Virtual Environment and Display

The display looks very compelling, and the continuously updated trajectory allows the user to immediately understand where the arrow will land as they adjust the bow draw length. Although firing is not tied to the user releasing the bowstring, due to algorithm and sensing limitations, the automatic firing cycle is timed so that the user can comfortably aim at the target, release, and re-draw the bow each time.

User Study

Using our bow and display interface, users were quickly able to shoot an arrow, hit the target within one or two shots, and learn the correct position at which to "fire" the bow. In general, users had a positive reaction when interacting with the bow and commented that it worked well to demonstrate the process of firing a bow. However, a common mistake was to first try to fire by physically releasing the bowstring, which resulted in the arrow not being fired in the virtual environment. This issue was quickly resolved after we described the proper firing procedure. Another common comment was the desire for more degrees of freedom in the haptic interface, so that the user could change the arc of the arrow. In the future, this can be addressed by adding an IMU to the bow to track its orientation. Users also commented that the bow was relatively heavy, which kept them from trying more draws after holding the bow for around 3 to 4 firing attempts.

Future Work

This haptic bow can be improved in several ways. Most importantly, the weight of the bowstring mechanism can be reduced so that the bow is lighter, better balanced, and more realistic. We had hoped to reduce the weight, but due to time and material limitations we were not able to move the mechanism to a lighter design and 3D printing material. There are several places where the bowstring can catch on protruding screws used to secure the capstan cable - eliminating these protrusions would make drawing the bow much smoother and reduce the need for additional parts to keep the string in place. The pulley can be refined with a deeper channel or an additional string guard, which would help prevent the bowstring from leaving the channel when the user draws or releases more forcefully than expected. The bowstring mechanism also does not rotate freely at low motor torques, so reducing drive friction, inertia, and binding could help create responsive bowstring action even at low motor torques.

The haptic rendering as-is is relatively simple. In the future, virtual walls or virtual bumps and valleys could be added to help users feel when they have reached an appropriate draw length to hit the desired target. The distance to the target could also be made adjustable so that users can practice their aim. We can also add sensors to give the arrow simulation additional degrees of freedom. An IMU can measure the angle of the bow and arrow relative to the x-axis, which would allow the arrow to be aimed up or down depending on the height and distance of the target. On the software side, we can implement an arrow release algorithm to determine whether or not the user has physically released the bowstring with the intent to fire the arrow. This would make the archery simulation feel more realistic, since the virtual arrow would then match the user's actions in the real world.

Acknowledgments

We would like to thank Professor Allison Okamura for her valuable feedback on refining our project prototype and redirecting our project goals. It was our pleasure to discuss our project ideas with her and receive her kind suggestions.

Files

CAD Models of Bow Body, Pulley and Motor Related parts: Attach:team15_cadfiles.zip

Arduino Code for Controlling Bow Draw Strength: Attach:Arduino_Control_Bow_Draw_Strength.zip

Processing Code for Visualizing the Virtual Environment: Attach:Archery_Target_practice.zip

References

[1] Butnariu, Silviu, M. Duguleană, Raffaello Brondi, C. Postelnicu, and M. Carrozzino. "An interactive haptic system for experiencing traditional archery." Acta Polytechnica Hungarica 15, no. 5 (2018): 185-208. http://acta.uni-obuda.hu/Butnariu_Duguleana_Brondi_Girbacia_Postelnicu_Carrozzino_84.pdf

[2] Göbel, Sebastian, Christian Geiger, Christin Heinze, and Dionysios Marinos. "Creating a virtual archery experience." In Proceedings of the International Conference on Advanced Visual Interfaces, pp. 337-340. 2010. https://dl-acm-org.stanford.idm.oclc.org/doi/pdf/10.1145/1842993.1843056

[3] Shim, Youngbo Aram, Taejun Kim, and Geehyuk Lee. "QuadStretch: A Forearm-wearable Multi-dimensional Skin Stretch Display for Immersive VR Haptic Feedback." In CHI Conference on Human Factors in Computing Systems Extended Abstracts, pp. 1-4. 2022. https://dl.acm.org/doi/abs/10.1145/3491101.3519908

[4] Gu, Xiaochi, Yifei Zhang, Weize Sun, Yuanzhe Bian, Dao Zhou, and Per Ola Kristensson. "Dexmo: An inexpensive and lightweight mechanical exoskeleton for motion capture and force feedback in VR." In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, pp. 1991-1995. 2016. https://dl.acm.org/doi/abs/10.1145/2858036.2858487

Appendix: Project Checkpoints

Checkpoint 1

Completed the mechanical design for the bowstring mechanism. It consists of the hapkit motor + board mounted to a capstan pulley similar to the hapkit which allows for 240 degrees of rotation. The bowstring is also fixed to the pulley which allows for the bowstring to wind/unwind as the user pulls on it. The dimensions of the pulley and the mounting points for the bowstring and the capstan cable were chosen to enable a 24" draw length. We have ordered hardware on McMaster and the custom parts are currently being 3D printed.

We still need to complete the integration features with the bow body.

Also completed the mechanical design for the main bow body. It consists of a lighter upper part, a handle, and a lower part, for better balance when held by the user. The total length of the bow body was chosen to meet the requirement for a 24" draw length. The design is still being adjusted to fit the bowstring mechanism and will be sent for laser cutting once both are finalized.

Coding Decisions:

For the two motor-encoder setups on the bow, we decided to implement a mass-spring-damper system that causes the force to increase the further the user pulls the string back. The damper is used to stop the string from oscillating once it reaches its equilibrium point. Because there will be two devices, at the top and bottom of the bow, each will apply half of the overall force so that the sum of the applied forces equals the desired force. Since the force that the string should apply to the bow will be the same for both devices, only one board will communicate with the graphical interface on the computer. This keeps the communication code simple. We will also send both the string position and the calculated string force to the graphical interface, which will make it easier to draw the desired dynamics.
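The decisions above can be sketched as follows. This is a minimal illustration, not the team's Arduino code: the gains `k` and `b`, the function names, and the comma-separated message format are all assumptions, and the clamp to zero reflects the fact that a string can only pull.

```python
def string_force(x, v, k=200.0, b=5.0):
    """Total force (N) from a virtual spring-damper on the bowstring.

    x: draw displacement from the undrawn position (m)
    v: draw velocity, positive while pulling back (m/s)
    k, b: hypothetical stiffness (N/m) and damping (N*s/m) gains
    """
    if x <= 0:
        return 0.0
    # Spring term grows with draw; damper term resists motion to limit oscillation.
    return max(0.0, k * x + b * v)


def per_motor_force(x, v, k=200.0, b=5.0):
    """Each of the two mechanisms renders half of the total force."""
    return 0.5 * string_force(x, v, k, b)


def serial_message(x, v):
    """Hypothetical 'position,force' line sent by the one communicating board."""
    return f"{x:.4f},{string_force(x, v):.3f}"


print(serial_message(0.3, 0.0))
```

Sending position and total force in one line lets the display draw the string and derive the arrow's launch conditions from a single read.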

Visualization Decision:

We will use Processing to visualize the trajectory of the arrow as it travels toward its destination. The final destination will be based on the force sent by the motor-encoder setups. The arc of the arrow will follow the ballistic trajectory of a thrown ball, so that we do not have to consider lift caused by the arrow shape. Here is a rough sketch of how it will look:

Checkpoint 2

Bow prototype:

Laser cut and assembled our first prototype to test whether it met our expectations in overall size and weight.

Based on our trials, its length and weight make it somewhat difficult for some people to hold. We decided to scale it down to a more portable design that uses less material and is lighter, so that users can hold the bow and draw the string simultaneously without external support. We will laser cut the new parts and test again soon.

Code and GUI:

Determined the equations needed to compute the initial velocity imparted to the arrow. The derivation is as follows:
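A sketch of one such derivation, assuming an ideal linear virtual spring whose stored energy is transferred entirely to the arrow on release. The symbols $k$ (spring stiffness), $x$ (draw length), $m$ (arrow mass), and $v_0$ (initial arrow speed) are assumptions introduced for illustration:

$$
E = \tfrac{1}{2} k x^2, \qquad T = \tfrac{1}{2} m v_0^2, \qquad T = E \;\Rightarrow\; v_0 = x \sqrt{\frac{k}{m}}
$$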

where T is the kinetic energy and E is the potential energy. Most of the virtual display is complete. We have a virtual bow, arrow, and target. All that is missing is simulating the arrow once it is released and showing the predicted trajectory of the arrow while it is still attached to the bow. We will attach a video later, as we left the haptic device in the ME lab.

Also wrote the Arduino code necessary to simulate the bow drawback. We still need to calibrate the Arduino code so that we can use the new capstan drives for the bow.