2024-Group 7

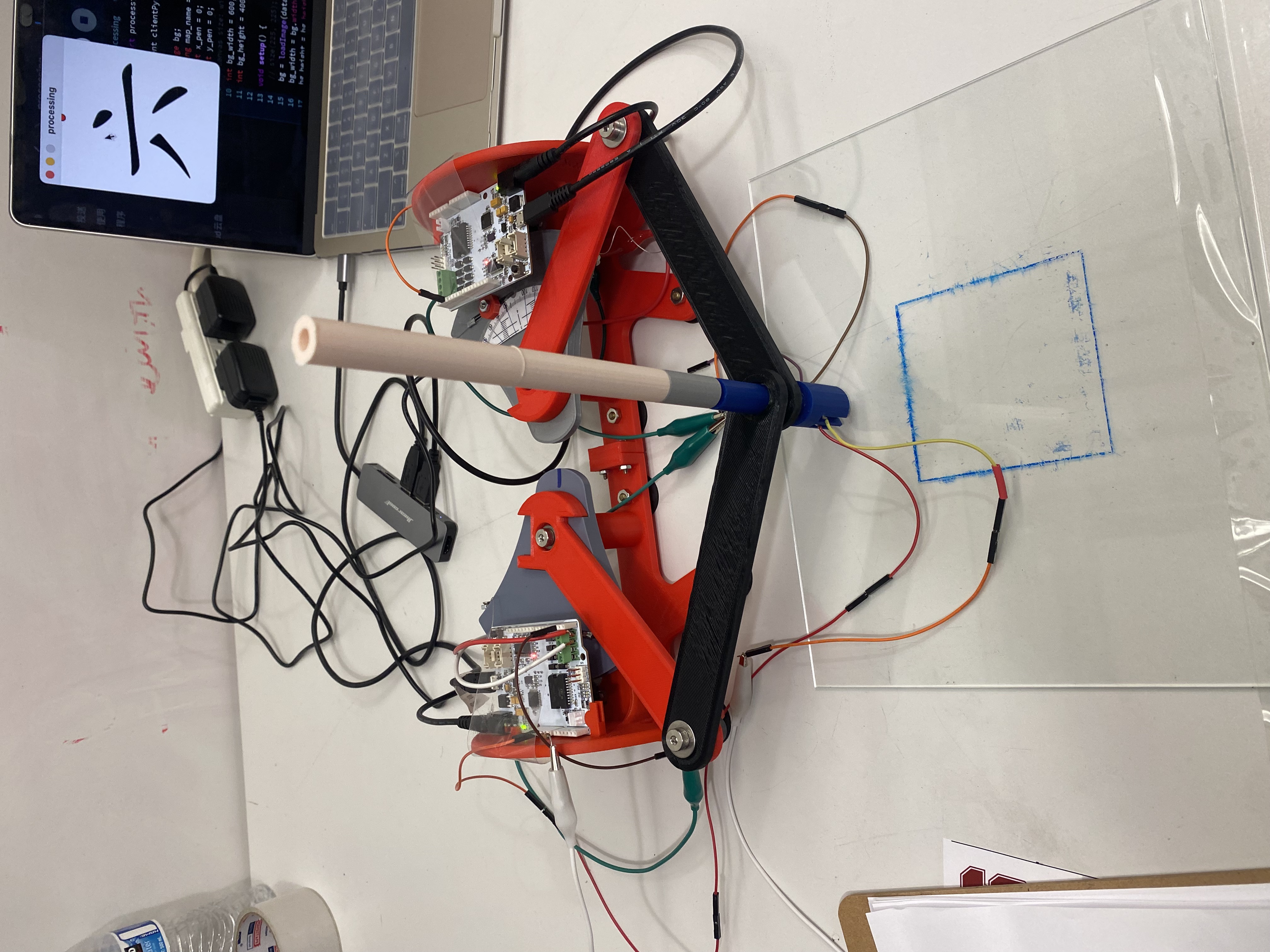

Team 7 members and the Haptic Calligraphy Training Device.

Calligraphy Training Device

Project team member(s): Harrison Guan, Xingze Dai, Austin Yang, Chester Pan

Chinese calligraphy, an art form valued for its aesthetic and meditative qualities, presents a steep learning curve for beginners, primarily due to the skill required in brush handling and stroke execution. To address these challenges, our ME327 project team developed a calligraphy training device that incorporates haptic feedback. The device facilitates learning by providing tactile responses through a specially engineered pen linked to motion-tracking Hapkits, which guide users along the correct stroke paths.

The hardware setup features a pantograph mechanism that allows free movement of the pen across a 2D plane, ensuring realistic simulation of traditional calligraphy strokes. Complementing this, our software utilizes a Python-based pipeline to generate dynamic feedback aligned with digital stroke patterns, enhancing muscle memory and reducing beginner errors. Preliminary testing at our Haptics Open House demonstrated the device’s potential to lower the traditional barriers to learning calligraphy. Feedback from users highlighted the intuitive nature of the technology, though it also pointed out areas for enhancement, particularly in spatial resolution and feedback accuracy.

Looking forward, we plan to refine the device’s feedback mechanisms and expand its capability to handle more complex characters and larger writing spaces. These improvements aim to make the art of Chinese calligraphy more accessible, enjoyable, and less daunting for novices, potentially transforming the traditional learning process.


Introduction

Chinese calligraphy, a revered art form with over two millennia of history, is the artistic practice of writing Chinese characters with brush and ink. The difficulty of finely manipulating the ink brush while composing each character adds to its beauty, but it also raises the barrier for beginners. When beginners first start to practice, they cannot control the ink brush adeptly because they lack muscle training and muscle memory. Because Chinese calligraphy has a long learning curve, the written characters may remain unsatisfactory for several months, which discourages enthusiasts from continuing. Moreover, young learners in particular may accidentally knock over ink containers and damage their surroundings. To enhance training and mitigate accidents, we incorporate haptic feedback into a device that tracks correct handwriting paths. The device features a brush connected to two Hapkits that track its movement. Ideally, such a device should make the learning process more efficient and friendly.

Background

Recent advancements in haptic feedback technology have significantly influenced the development of training systems for Chinese calligraphy, highlighting three crucial studies. Yeh et al.'s research introduced an interactive system that simulates the tactile feel of traditional painting brushes through dynamic physical models and ink-water transfer algorithms, paired with PHANTOM force feedback devices to enhance realism and stroke precision in calligraphy training. In parallel, Luo et al. (2023) utilized virtual reality combined with sponge-based haptic feedback, demonstrating the use of cost-effective materials to mimic the traditional brush's tactile sensation effectively, which proved to be optimal in their comparative study.

Building on these foundations, Wang et al. developed a high-fidelity haptic simulation system, incorporating detailed stroke modeling and a three-level task planning model. This system utilizes a state transition graph for dynamic simulation and a modified virtual fixture method to ensure stability and accuracy in feedback, thereby optimizing task execution and state management for learners. These studies collectively suggest modifications to haptic feedback mechanisms can enhance virtual calligraphy systems by providing more realistic and stable tactile sensing, thus preventing user frustration and improving skill acquisition. The overarching conclusion is that thoughtful integration of haptic feedback in training tools can greatly enhance the learning experience and accuracy of Chinese calligraphy techniques.

Methods

The diagram illustrates the data flow and system architecture of our calligraphy training device. The core system is a Python application that reads and processes character map data stored in a 'Map Folder.' The processed data is sent via socket communication to Processing, which visualizes the calligraphy strokes on a laptop. Concurrently, the Python script exchanges torque and angle data over serial communication with two Hapkits, which physically guide the user's hand movements and provide tactile feedback as the user mimics the strokes displayed on the screen.

Hardware Design and Implementation

Calligraphy training requires the pen to move freely in a 2D plane, so we implemented a pantograph structure. Beyond the setup provided by two basic Hapkits, this requires several additional mechanical components: a fixed base for the two motor-capstan-handle systems, two linkages fixed to the handles, and two free linkages that connect those linkages to the pen. Two previous haptics projects, A Planar 2-DOF Haptic Device for Exploring Gravitational Fields and 2 Degree of Freedom Assistive Drawing Device, also used this mechanism and showed the pantograph's capability in 2D tasks. We adopted the pantograph resources provided by the Hapkit Wiki (Hapkit Two-Degree-Of-Freedom Devices), fabricated the required parts by FDM, and assembled the structure.

During the production of the pantograph from the open-source Hapkit Wiki, we encountered several issues related to the tolerance and fitting of the linkages. After several iterations, we were able to achieve a proper fit for all parts, as illustrated in the figure below.

Our pen structure design differs from those of previous teams, particularly in its application to Chinese calligraphy. As shown in the figure below (originally from The art of Chinese calligraphy), Chinese calligraphy requires holding the brush perpendicular to the writing surface, which can be challenging and exhausting during prolonged use but is necessary for proper technique. Our pen design minimizes tilting and keeps the pen almost perpendicular to the writing surface, which helps users develop muscle memory.

Additionally, we have implemented a bottom switch within the pen, as depicted in the figure below. This feature aims to simulate the natural writing pattern. In traditional writing, downward pressure is needed to engage the brush with the writing surface, while lifting the pen pauses the writing. Our design incorporates a bottom switch that activates force feedback when the pen is pressed downward, providing force guidance for training purposes. When the pen is lifted, the force feedback deactivates, allowing free movement.

Software and Visualization

Two separate efforts have been made on the software and visualization. We first created an automated Python pipeline to generate canvases for different characters, and then we developed the main haptic emulation program.

The pipeline performs the following tasks. First, it reads all character images, of any size and color, from the resource folder. Second, it unifies the dimensions of the character images and creates canvases for them. Third, it blurs each resized image by convolving it with a 2D Gaussian filter and adds the result element-wise to the unblurred copy. Fourth, it normalizes the summed image and calculates its image gradient in both the x and y directions. Finally, it normalizes the 2D gradient. The result is a spatial representation that maps every position in the state space (i.e., on the canvas) to a 2D unit vector normal to the edge of a character. We take the haptic feedback force at each position to be collinear with this vector, so the feedback force and the normalized gradient share the same direction. This representation is computed for every character in the database and stored separately for later use in force control.
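The blur, sum, normalize, and gradient steps above can be sketched in a few lines of NumPy. This is a minimal illustration rather than our exact pipeline: the function names, the kernel width `sigma`, and the convention that ink pixels carry high values (so the gradient points toward the stroke) are all assumptions, and image loading and resizing are omitted.

```python
import numpy as np

def gaussian_blur(image, sigma=2.0):
    """Blur a 2D array with a separable Gaussian kernel (edge-padded)."""
    radius = int(3 * sigma)
    offsets = np.arange(-radius, radius + 1)
    kernel = np.exp(-(offsets ** 2) / (2.0 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(image, radius, mode="edge")
    # Convolve every row, then every column; "valid" trims the padding again.
    rows = np.apply_along_axis(
        lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(
        lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)

def direction_field(image, sigma=2.0, eps=1e-9):
    """Return (unit_x, unit_y): per-pixel unit gradient of blurred + original.

    Assumes ink pixels are bright (high values), so the vectors point toward
    the nearest stroke. Pixels with a vanishing gradient map to (0, 0).
    """
    img = np.asarray(image, dtype=float)
    summed = gaussian_blur(img, sigma) + img
    # Normalize the summed image to the range [0, 1].
    summed = (summed - summed.min()) / (summed.max() - summed.min() + eps)
    # np.gradient returns derivatives along axis 0 (rows, y) then axis 1 (x).
    grad_rows, grad_cols = np.gradient(summed)
    magnitude = np.hypot(grad_cols, grad_rows)
    safe = np.where(magnitude > eps, magnitude, 1.0)
    return grad_cols / safe, grad_rows / safe

# Toy canvas: one vertical stroke three pixels wide.
canvas = np.zeros((21, 21))
canvas[:, 9:12] = 1.0
unit_x, unit_y = direction_field(canvas)
```

On this toy canvas, pixels just left of the stroke get a unit vector pointing right and pixels just right of it get one pointing left, while pixels beyond the reach of the blur get a zero vector and therefore no guidance.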

The automated pipeline has several advantages. First, it can process hundreds of characters in the character database within milliseconds, which makes character preparation efficient and scalable. Second, it greatly reduces the difficulty of resource collection: the character database can be generated from pictures of single characters of any dimension, file format, or color. Third, the gradient algorithm supports more complex geometries and structures. Previously documented projects in this course implemented only simple geometries such as a circle, whereas we are able to realize haptic feedback over Chinese calligraphy, which is geometrically and structurally challenging. Fourth, the pipeline makes calligraphy training scalable not only in size but also in variety. In this project we chose Chinese calligraphy as our demonstration, but the same training can be applied to any other written language.

The main program, a Python-driven haptic emulation program, tightly synchronizes hardware, software, and visualization. After investigating the available visualizers and GUIs, we decided to use Processing for visualization. We established socket communication between Python and Processing, enabling seamless data transfer and integration between the two environments. To provide real-time visual representation, we wrote Processing code to visualize the canvas, character, and pen stroke, ensuring an accurate and intuitive display of the drawing process. Because of the hardware design, we continue to use Arduino to drive our two Hapkits, which are connected to the computer through USB ports. We established two parallel serial connections between Python and the Arduino boards, a critical step for hardware interaction and data acquisition.

To address the complexity of concurrent operations, we managed concurrency and latency across the two Arduino ports and the one Processing socket so that communication stays synchronized, smooth, and efficient. Defensive programming, such as interrupt handling and lost-connection handling, makes the program robust. We also modularized our Python program by, for example, creating separate functions for coordinate transformations and controls. This modularization is essential for accurate data processing and ease of debugging.
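As a rough sketch of the Python-to-Processing link, the snippet below encodes the pen state as one newline-terminated text line and writes it to a socket. The message layout (`x,y,pen_down`), the function names, and the host and port in the usage note are hypothetical and chosen only for illustration; the project's actual message format is not documented on this page.

```python
import socket

def encode_pen_state(x_px, y_px, pen_down):
    """Pack the pen state into one ASCII line (hypothetical format)."""
    return f"{x_px:.1f},{y_px:.1f},{int(pen_down)}\n".encode("ascii")

def send_pen_state(sock, x_px, y_px, pen_down):
    """Send one pen-state line; sendall retries until every byte is written."""
    sock.sendall(encode_pen_state(x_px, y_px, pen_down))
```

In use, the Python side would open the connection once, for example with `socket.create_connection(("127.0.0.1", 5005))` (address assumed), and call `send_pen_state` on every loop iteration. A newline-terminated text line is easy to read line by line on the receiving side.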

System Dynamics and Control

To make sure our pen can move freely in a plane, we use the Graphkit from Hapkit Two-Degree-Of-Freedom Devices as our two-degree-of-freedom device. The Graphkit kinematics are derived from "The Pantograph Mk-II: A Haptic Instrument". We also referred to last year's Group 4 (https://charm.stanford.edu/ME327/2023-Group4) for the derivation. The kinematic structure is a five-bar planar linkage, represented in the figure.

The pen is located at point P3 and moves in a plane with two degrees of freedom with respect to the ground link, where the actuators are located at P1 and P5. The configuration of the device is determined by the two angles θ1 and θ5, and the force at P3 is due to torques applied at joints 1 and 5. The position of P3 can be derived as follows:
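A minimal sketch of this position calculation is given below. It assumes the frame used in the Pantograph Mk-II paper as we understand it: P1 at the origin, P5 at (-a5, 0), link lengths a1 (P1 to P2), a2 (P2 to P3), a3 (P3 to P4), a4 (P4 to P5), and PH the foot of the perpendicular from P3 onto the line P2P4. The function name and the link lengths in the example are hypothetical, not the Graphkit dimensions.

```python
import math

def forward_kinematics(theta1, theta5, a1, a2, a3, a4, a5):
    """Return (x3, y3), the pen position of a five-bar linkage.

    Assumed frame: P1 at the origin, P5 at (-a5, 0), angles measured from
    the +x axis. Raises ValueError if the linkage cannot close.
    """
    # Elbow joints driven directly by the two actuated angles.
    x2, y2 = a1 * math.cos(theta1), a1 * math.sin(theta1)
    x4, y4 = a4 * math.cos(theta5) - a5, a4 * math.sin(theta5)
    # d = |P2 - P4|, b = |P2 - PH|, h = |P3 - PH|.
    d = math.hypot(x4 - x2, y4 - y2)
    b = (a2 ** 2 - a3 ** 2 + d ** 2) / (2.0 * d)
    h = math.sqrt(a2 ** 2 - b ** 2)
    # PH lies on the segment from P2 toward P4, at distance b from P2.
    xh = x2 + (b / d) * (x4 - x2)
    yh = y2 + (b / d) * (y4 - y2)
    # Step perpendicular to P2P4 by h, choosing the branch away from the base.
    x3 = xh + (h / d) * (y4 - y2)
    y3 = yh - (h / d) * (x4 - x2)
    return x3, y3

# Symmetric example with made-up link lengths.
x3, y3 = forward_kinematics(math.pi / 3, 2 * math.pi / 3,
                            a1=1.0, a2=2.0, a3=2.0, a4=1.0, a5=1.0)
```

For this symmetric pose the pen sits on the centerline of the base, at x = -0.5, which is a quick sanity check on the sign conventions.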

After calculating the position of P3, we also need to calculate the torques applied at P1 and P5 that produce the force at P3. This can be calculated by the equation:

The Jacobian matrix can be obtained by directly differentiating P3 with respect to θ1 and θ5.

where ∂i means the partial derivative with respect to θi. The further derivation is described in The Pantograph Mk-II: A Haptic Instrument as below: Let d = ∥P2 − P4∥, b = ∥P2 − PH∥, and h = ∥P3 − PH∥. Applying the chain rule to the position of P3:

With the Jacobian, we can now control the force applied at P3 by controlling the torques at P1 and P5. The torques needed from the motors can then be calculated by the equation:
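In the standard static relation, the joint torques follow from the transpose of the Jacobian: τ = JᵀF, with τ = (τ1, τ5) and F the desired force at P3. The sketch below illustrates this mapping with a finite-difference Jacobian in place of the analytical one from the paper. `fk` is a hypothetical callable that returns the pen position (x, y) for given joint angles, and the conversion from joint torque to motor command is left out.

```python
def numerical_jacobian(fk, theta1, theta5, step=1e-6):
    """Central-difference Jacobian of fk(theta1, theta5) -> (x, y).

    Returns ((dx/dtheta1, dx/dtheta5), (dy/dtheta1, dy/dtheta5)).
    """
    x_p, y_p = fk(theta1 + step, theta5)
    x_m, y_m = fk(theta1 - step, theta5)
    dx_d1, dy_d1 = (x_p - x_m) / (2 * step), (y_p - y_m) / (2 * step)
    x_p, y_p = fk(theta1, theta5 + step)
    x_m, y_m = fk(theta1, theta5 - step)
    dx_d5, dy_d5 = (x_p - x_m) / (2 * step), (y_p - y_m) / (2 * step)
    return (dx_d1, dx_d5), (dy_d1, dy_d5)

def joint_torques(fk, theta1, theta5, force, step=1e-6):
    """Map a planar force (fx, fy) at the pen to joint torques via J^T F."""
    (j11, j12), (j21, j22) = numerical_jacobian(fk, theta1, theta5, step)
    fx, fy = force
    tau1 = j11 * fx + j21 * fy  # first row of J^T
    tau5 = j12 * fx + j22 * fy  # second row of J^T
    return tau1, tau5
```

A numerical Jacobian is convenient for checking an analytical derivation, although a closed-form Jacobian is usually preferable inside a fast control loop.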

The control system is simplified as shown below.

Hapkit Angle Calculation: As illustrated in the figure above, the angles for the Hapkit are calculated using an Arduino board, following a methodology similar to what was covered in class.

Pen Position Calculation: Based on the dynamics discussed earlier, the position of the pen is determined and mapped to pixel positions.

Gradient Generation: After applying a blur to the map and generating gradients, we obtain the gradient for specific pixels.

Force Generation with P Controller: Utilizing a Proportional (P) controller, the gradient at specific pixels is used to generate force. A proportional gain coefficient amplifies the magnitude of the force feedback.

Torque Conversion: Finally, the generated force is converted to torque for the motor, completing the feedback loop.

This process ensures that the force feedback is accurately generated, providing users with the correct tactile sensation based on the gradient at specific pixels.
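The middle steps of this loop (pixel mapping, gradient lookup, and the P controller) can be sketched as follows. All names, the workspace-to-pixel scaling, and the sign flip between image rows and workspace y are assumptions made for illustration. The switch check reflects the pen behavior described earlier, where force feedback is active only while the pen is pressed down.

```python
def workspace_to_pixel(x, y, origin_x, origin_y, pixels_per_metre,
                       n_rows, n_cols):
    """Map a pen position to a (row, col) pixel, clamped to the canvas.

    (origin_x, origin_y) is the assumed workspace position of the top-left
    pixel; image rows grow downward while workspace y grows upward.
    """
    col = int(round((x - origin_x) * pixels_per_metre))
    row = int(round((origin_y - y) * pixels_per_metre))
    return min(max(row, 0), n_rows - 1), min(max(col, 0), n_cols - 1)

def feedback_force(unit_x, unit_y, row, col, gain, pen_down):
    """Proportional force along the stored unit gradient at one pixel.

    Returns (0, 0) while the pen switch is released so the pen moves freely.
    """
    if not pen_down:
        return 0.0, 0.0
    # Flip the y component to go from image coordinates to workspace axes.
    return gain * unit_x[row][col], -gain * unit_y[row][col]
```

The returned force would then be converted to joint torques through the Jacobian transpose before being sent to the motors.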

Results

The whole structure is shown below.

One simple demo is shown below.

We demonstrated our calligraphy training device at the Haptics Open House on June 4, 2024, where the teaching team, classmates, and a diverse group of participants tried our design. The system performed reliably throughout the event with no major malfunctions, which supports its robustness and ease of use and suggests that it is suitable for users of varied skill levels and backgrounds. Despite this success, we recognize that, owing to limited time and resources, the device did not fully meet the standard we set in our theoretical design. To ensure transparency and foster constructive feedback, we carefully explained the design rationale and the intended user experience to each participant. We also designed a five-question survey about participants' experience with the device. Nine participants responded, and the results are as follows:

The feedback survey on our haptic calligraphy project offers useful insight into user experiences and perceptions. The integration of haptic feedback into calligraphy training was notably well received, demonstrating strong support for this feature. We talked to one Ph.D. student who works on haptics for educational writing and training; she gave positive feedback on our idea and shared some information on current research in similar fields. Participants also found the system's instructions clear and the device easy to learn and use. However, the quality of the feedback force was identified as an area for improvement, as it may not always align with the expected stroke motions. Additionally, the button-click design, intended to replicate Chinese calligraphy motions, was generally appreciated, though it could be refined further. Overall, the project was positively received, suggesting a solid foundation with room for specific improvements to enrich the learning experience.

Some specific issues raised in the feedback are listed here:

- Some users noted that the force feedback could be subtle and at times inaccurate, occasionally directing the pen to unintended areas of the canvas. While moving along one stroke, they were sometimes dragged toward another stroke even though the visual interface showed the pen firmly inside the original stroke.

- There were also comments about the restricted workspace: the device reached its mechanical limits before the pen reached the boundaries of the visual interface. We observed that the linkage bars hit part of the fixed Hapkit structure, preventing users from moving further even when the graphics indicated the presence of a stroke in that direction.

The feedback suggests that the primary challenges stem from the device's current spatial and operational constraints. Using image gradients removes the labor of manually defining the feedback direction in every case, but it also introduces haptic confusion at some points. This is because image blurring improves numerical stability at the cost of a sharp edge definition. Where two strokes cross, the gradient around the crossing is radial: when the user drifts off the stroke near the crossing, the device pulls the user radially toward the center of the crossing instead of pushing them back perpendicular to the stroke. The effect is noticeable because such radial feedback can have a tangential force component that acts against the user and forces them off the stroke.
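A small worked example makes the size of this effect concrete. It idealizes the blurred crossing as a purely radial field of unit magnitude, which is an assumption, and splits the resulting force into components along and perpendicular to a horizontal stroke. The function names and coordinates are hypothetical.

```python
import math

def radial_force(pen, centre, magnitude=1.0):
    """Force of fixed magnitude pulling the pen straight toward the centre."""
    dx, dy = centre[0] - pen[0], centre[1] - pen[1]
    r = math.hypot(dx, dy)
    return magnitude * dx / r, magnitude * dy / r

def decompose(force, stroke_dir):
    """Split a force into (tangential, normal) parts relative to a stroke."""
    tx, ty = stroke_dir
    norm = math.hypot(tx, ty)
    tx, ty = tx / norm, ty / norm
    tangential = force[0] * tx + force[1] * ty
    normal = -force[0] * ty + force[1] * tx  # along the stroke's left normal
    return tangential, normal

# Pen 3 units along a horizontal stroke and 1 unit above it; crossing at origin.
tangential, normal = decompose(radial_force((3.0, 1.0), (0.0, 0.0)), (1.0, 0.0))
```

Here the force needed to return to the stroke is purely normal, yet the radial field delivers about 0.95 of its magnitude along the stroke (toward the crossing) and only about 0.32 toward the stroke itself.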

Future Work

Based on the discussions with participants, we have outlined several strategic directions for further refinement and development of our device:

- By more clearly defining the sequences and directional flow of strokes, we can enhance the system's efficiency and reduce interference from adjacent stroke maps. This refinement will ensure that only the stroke being worked on is activated, which aligns more closely with the fundamental goals of calligraphy training.

- A redesign of the hardware is also under consideration. By expanding the workspace, we can accommodate more complex maps. This expansion will likely reduce the occurrence of inaccuracies that are more prevalent in confined spaces, thereby improving the overall efficacy and user experience of our training device.

Acknowledgments

Thanks to Prof. Okamura and the CAs for the whole quarter!

Thanks to those who came to the Open House and provided feedback!

Files

The CAD files of the pen structure are attached here. Attach:Team7_CAD_Files.zip

The CAD files of the main pantograph structure are attached here. Attach:2024_Team7_Pantograph.zip

The code and running instructions are attached here.

The cost sheet is attached here. Attach:Team7_Cost_Sheet.xlsx

References

[1] J. Muirhead, S. Schorr, "A Planar 2-DOF Haptic Device for Exploring Gravitational Fields" JARED AND SAM, 2012. [Online]. Available: https://charm.stanford.edu/ME327/JaredAndSam. Accessed: Jun. 5, 2024.

[2] L. Gunnarsson, R. Hall-Zazueta, S. Richardson, and X. Sahin, "2 Degree of Freedom Assistive Drawing Device", 2023-GROUP 4, 2023. [Online]. Available: https://charm.stanford.edu/ME327/2023-Group4. Accessed: Jun. 5, 2024.

[3] Hapkit, "Two-Degree-Of-Freedom Devices", [Online]. Available: https://hapkit.stanford.edu/twoDOF.html. Accessed: Jun. 5, 2024.

[4] I. Micunovic, "The art of Chinese calligraphy", 24 SEPTEMBER 2020, [Online]. Available: https://www.meer.com/en/63496-the-art-of-chinese-calligraphy. Accessed: Jun. 5, 2024.

[5] G. Campion, Q. Wang, and V. Hayward, "The Pantograph Mk-II: A Haptic Instrument", in 2005 IEEE/RSJ International Conference on Intelligent Robots and Systems, IEEE, 2005. [Online]. Available: http://dx.doi.org/10.1109/IROS.2005.1545066

Appendix: Project Checkpoints

Checkpoint 1

Checkpoint 1 goal: By Thursday, May 23 at 11:59 pm, hardware mechanisms should be designed and ready to manufacture. The programming languages and environments should be decided and set up for virtual interactions.

Hardware

We've started researching appropriate structures. A pantograph structure can efficiently cover two-dimensional space. The example forward mechanism is described in Design and Control of a Pantograph Robot. Within our Hapkit system, Hapkit Two-Degree-Of-Freedom Devices provides example resources for fabricating the mechanical structure. This setup is excellent for straightforward applications, as shown in previous projects that involve pantograph mechanisms, but the calligraphy training we envision demands a more nuanced approach. Our system needs to manage the specific stroke orders and lift motions that are integral to traditional path-following tasks. Therefore, we want to modify the pantograph structure to adapt it to our system and are currently working on the modification. This involves additional components that mimic the "lifting pen" motion (without necessarily adding degrees of freedom to the pantograph robot), which users should be able to access easily while writing. Ideas like the side touch of an Apple Pencil or a push button are good directions. We have developed some CAD models to customize the Hapkit Two-Degree-Of-Freedom Devices, such as adding springs to enable the "lifting pen" motion, and will start manufacturing them this week to test which works best. We are also considering adding a force sensor under the spring to check whether the pen is lifted. When the pen is lifted, the user will feel no force feedback, since the pen should move freely over the page.

Software

We decided to keep using the Arduino interface to program our device since we are using a Graphkit-based mechanism. For visualization, we are using Processing. We researched alternatives to Processing because none of us is familiar with Java. However, our research (https://www.reddit.com/r/Python/comments/vjuiw/is_there_anything_like_processing_for_python/) indicates that Processing is the best rendering software for this purpose. The closest alternative would be JavaScript, which again none of us is familiar with, and the Python alternative package loses convenience and some functionality. As a result, we will continue to use Processing as our rendering software, building on our experience from the homework assignments. We have generated several simple shapes for the pen to follow. The graph shows a simple ring, whose inner and outer circles are treated as walls.

We cannot limit ourselves to generating a virtual tube enclosing a ring, because a Chinese character is far more complex in shape and structure. Creating the virtual tubes by hard coding would be extremely tedious. Although we don't have a better solution yet, we are considering an automated pipeline, possibly utilizing AI, to generate the virtual tube enclosing any Chinese character that we store on the Arduino beforehand.

We are now working separately in two threads. The first thread is to design paths for Chinese characters and render their force feedback. The second thread is to integrate the mechanism dynamics into Arduino and Processing. We will then implement the virtual tubes constructed in the first thread in the code.

To sum up, we have met most of our Checkpoint 1 goals. The hardware design was a little behind schedule because we were trying to add some creative designs to the given Graphkit in order to meet our requirements. It was challenging for us to solve the "lifting pen" problem. After our testing, we may need to redesign our mechanism if the results are not ideal. Regarding the software, we are delighted to keep using Processing for the virtual rendering, and we are more delighted to see that we are able to generate a virtual ring in which we can follow the path. However, there are more challenges that we haven't thought of, such as generating the virtual tube for Chinese characters efficiently. As we come up with a solution to this, we can proceed to implement it in the Arduino and Processing.

Checkpoint 2

Hardware

The pantograph structure was successfully assembled. We used components from four Hapkits, printed additional parts from the Graphkit wiki, and connected them together. However, we did not use the pen-holder linkage provided by Graphkit, since we customized the pen structure for our system. We used the same arm link for both sides (the black ones in the picture).

The pen is designed separately with the "lifting pen" feature. When people write, they usually press the pen downward, and we use the same idea here. As shown in the figure below, the pen has two parts. A button is attached to the lower part and is triggered when the upper part is pressed downward. In this way, we imitate people's writing habits. When the user presses the pen downward, the button is pressed and the system provides force feedback to teach the calligraphy. When the user lifts the pen, the button is released, the system provides no force feedback, and the pen can be moved freely.

The video below shows an example of our button.

Attach:Team7Checkpoint2V_bottom.mp4

Software

For this checkpoint, we completed a series of software tasks that significantly advance the project. First, we selected a representative set of Chinese characters formatted suitably for training purposes, ensuring that they encompass a broad range of linguistic features and are optimized for our algorithms. Following this, we developed an automated Python pipeline that creates the visualization bases, streamlining the visualization process and enabling efficient data analysis.

In building the entire program structure, we utilized Python to ensure a robust and scalable framework that supports our project's requirements. Additionally, we established socket communication between Python and Processing, enabling seamless data transfer and integration between the two environments. To facilitate real-time visual representation, we wrote Processing code to visualize the canvas, character, and pen stroke, ensuring an accurate and intuitive display of the drawing process.

Furthermore, we established serial communication between Python and Arduino, a critical step for hardware interaction and data acquisition. Addressing the complexity of concurrent operations, we managed concurrency and latency issues across two Arduino ports and one Processing socket, ensuring smooth and efficient communication. We also atomized our Python program by creating some functions for coordinate transformations and calculations, which are essential for accurate data processing and analysis.

Lastly, we initiated and implemented a novel virtual wall feedback mechanism, providing a responsive and interactive element to the system while ensuring low latency for the online algorithm, enhancing user experience and system functionality.

Attached below is an example of our entire virtual environment and visualization without an actual hardware data input stream.